Nintex’s dynamic applications need test automation that keeps pace with continuous delivery without sacrificing product quality or value. Although there is no all-encompassing solution for automating Azure Databricks testing, we needed to build stable tests faster while scaling our team and our testing efficiency. To meet these demands, we built a productive and scalable test automation framework with decent coverage, using native C# code and a few NuGet libraries.

Introducing the Nintex Analytics System

Azure Databricks is the big data service at the core of the Nintex Analytics System (NAS) backend. The goal of NAS automation is to validate NAS end to end.

NAS automation validates –

- Data ingestion into the NAS system.

- Processing and storing of the data in Databricks.

- Data quality standards such as accuracy, conformity, completeness, consistency, integrity, and timeliness.

- That the Databricks system runs smoothly and error-free while maintaining performance and security.

- That the data is retrieved correctly from the APIs with proper security.

The NAS Databricks system involves three phases. The automation strategy applied in each phase is explained below.

- Phase I – Data Ingestion (Ingestion)

- Phase II – Data Processing (Curated)

- Phase III – Data Retrieval (Reporting)

Phase I – Ingestion

The functionality of this stage is:

- Data generated from source systems such as Nintex Workflow Cloud, Office 365, on-premises servers, Nintex Promapp®, Nintex Forms, robotic process automation, Salesforce, and Nintex DocGen® is ingested into the NAS event hubs.

- The ingestion function processes the ingested events, converts them into the NAS-compatible nv1 (NAS v1) message format, and stores them in the respective raw blob storage containers.

Test coverage and automation strategy –

- Simulate and verify the connectivity with different source systems.

  - Automation uses a `collection.json` file that includes events with all `eventTypes` across all Nintex products.

- Verify sending the `collection.json` events to the NAS ingestion event hub.

  - Read the values of `eventHubConnectionString`, `sharedAccessKey`, and `blobStorageConnectionString` for each source system from a config file, and send events to the `IngestionEventHub`.

  - Validate that data cannot be ingested without an authorized token.

- Verify all the ingested events are processed by the ingestion function as expected.

  - Using the `WindowsAzure.Storage` NuGet library, set up all the blob storage accounts for raw storage and exception storage of the product.

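        using System.Collections.Generic;
        using Microsoft.WindowsAzure.Storage;
        using Microsoft.WindowsAzure.Storage.Blob;

        // Parses the connection string and registers the product's container reference.
        // BlobContainer is assumed to be a Dictionary<string, CloudBlobContainer> field of the fixture.
        private void SetupBlobStorage(string prodId, string blobConnStr, string blobContainerName)
        {
            var storageAccount = CloudStorageAccount.Parse(blobConnStr);
            var bClient = storageAccount.CreateCloudBlobClient();
            BlobContainer.Add(prodId, bClient.GetContainerReference(blobContainerName));
        }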

- Validate that each successfully processed NV1 event is stored in the respective raw blob storage container.

- Validate that the data from each source system conforms to the agreed contract.

- Validate that a storage account is created for a product if one does not already exist.

- Access each raw blob storage account and verify that a JSON file exists.

- Validate that an event with an attachment stores the attachment as a JSON file.

- Validate that an event with only a payload and no attachment stores the payload as a JSON file.

- Validate that invalid or incompatible messages are stored in the exception storage containers.

Phase II – Curated

The functionality of this stage is:

- Data processing and data storage. The data from the raw blob storage accounts is processed and stored in Databricks tables. Data is streamed in two stages by two Databricks jobs:

- Bronze job

- Silver job

What is a bronze job?

The bronze job streams data from each raw blob storage account (files in JSON format) into a flat bronze table. All messages are stored in one common table by copying the JSON file without parsing it. This stage covers data completeness on a garbage in, garbage out (GIGO) basis: everything stored in raw blob storage must be processed and stored in the bronze table with no loss of data.

Test coverage and automation strategy –

- Verify the Databricks jobs run smoothly and error-free. After the ingestion tests pass in Phase I, the script triggers the bronze job run from Azure Databricks.

  - Using the Databricks APIs and a valid DAPI token, start the job through the `/run-now` API endpoint and get the `RunId`. (More such interactive Databricks APIs can be found in the official API documentation.)

        using System;
        using System.Threading.Tasks;
        using Newtonsoft.Json.Linq;
        using RestSharp;

        // Starts a Databricks job through the run-now endpoint and returns its run ID.
        public async Task<int> RunAndGetRunId(string jobId, string param)
        {
            // Assumes param, when present, is an already-serialized JSON object for notebook_params.
            var json = "{\"job_id\":" + jobId
                + (string.IsNullOrEmpty(param) ? string.Empty : ", \"notebook_params\":" + param)
                + "}";
            var request = new RestRequest("api/2.0/jobs/run-now");
            request.AddJsonBody(json);
            var response = await this._httpClient.ExecutePostAsync(request);
            if (!response.IsSuccessful)
            {
                throw new Exception($"Error while starting job {jobId}, error {response.StatusCode}: {response.ErrorMessage}");
            }

            var jsonObj = JObject.Parse(response.Content);
            return jsonObj.Value<int>("run_id");
        }

  - Continue polling the job status until the specific `RunId` reaches the `TERMINATED` life cycle state.

        using System;
        using System.Threading.Tasks;
        using Newtonsoft.Json.Linq;

        // Polls the run every 30 seconds until it finishes, at most 40 times (= 20 minutes).
        private async Task<string> PollJobState(string job, int run_id)
        {
            const int maxPolls = 40;
            for (int pollNumber = 0; pollNumber < maxPolls; pollNumber++)
            {
                await Task.Delay(30000);
                JObject jsonObj2 = await this.CheckJobState(run_id);
                var state = jsonObj2["metadata"]["state"];
                var lifeCycleState = state["life_cycle_state"].Value<string>();

                // Still in progress: poll again.
                if (lifeCycleState == "PENDING" || lifeCycleState == "RUNNING" || lifeCycleState == "TERMINATING")
                    continue;

                if (lifeCycleState != "TERMINATED")
                    throw new Exception($"Job '{job.Trim()}' failed, life cycle state: {lifeCycleState}");

                var resultState = state["result_state"].Value<string>();
                // Promapp jobs are treated as done even when the result state is FAILED.
                bool done = resultState == "SUCCESS" || (job.Contains("Promapp") && resultState == "FAILED");
                if (!done)
                    throw new Exception($"Job '{job.Trim()}' failed, result state: {resultState}");

                var output = jsonObj2["notebook_output"];
                return output != null && output.HasValues ? output["result"].Value<string>() : string.Empty;
            }

            // The job did not finish within the polling budget.
            return string.Empty;
        }

- Verify data completeness: after the job run completes, verify all the valid NV1 events stored in raw blob storage are streamed into the bronze schema table `tbl_events`.

  - Query the bronze table data using a query job and load it into a `List<object>`.

  - For each item from the `List<object>` as the actual value, assert that it matches the respective event from the `collection.json` file as the expected value.
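The completeness check can be pictured as a set comparison between the events that were sent and the rows that arrived. The snippet below is a minimal sketch, not the NAS framework's code: `CompletenessCheck`, `FindMissing`, and the use of a single ID (for example `correlationId`) as the lookup key are assumptions made for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class CompletenessCheck
{
    // Returns the expected IDs that never arrived in the table, so a test
    // can report exactly which events were lost instead of only a count.
    public static IReadOnlyList<string> FindMissing(
        IEnumerable<string> expectedIds, IEnumerable<string> actualIds)
    {
        // Assumption: IDs are GUID strings whose casing may differ between systems.
        var actual = new HashSet<string>(actualIds, StringComparer.OrdinalIgnoreCase);
        return expectedIds.Where(id => !actual.Contains(id)).ToList();
    }
}
```

A test would then assert that the returned list is empty and print the missing IDs on failure.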

What is a silver job?

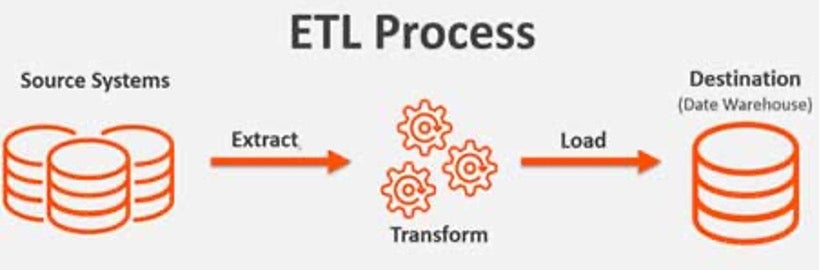

The silver job streams and parses data from the bronze table into the respective silver table, chosen by `eventType`, through an ETL (extract, transform, load) process. It enforces data quality standards such as completeness, consistency, accuracy, conformity, and integrity.

Test coverage and automation strategy –

- Verify ETL job performance by running the respective ETL silver job for each platform (Workflow ETL, CRM ETL, Promapp ETL, Forms ETL, and so on) and polling its `RunId` until completion.

- Query each silver table using a query job and load it into a `List<object>`.

- For each item from the `List<object>` as the actual value, assert that it matches the respective event from the `collection.json` file as the expected value.

- Verify data completeness: validate that every field from the JSON message is mapped to a column and stored in silver with no loss of data.

  - Validate that both events match by `correlationid`, `isolationid`, and `productid`, to ensure the comparison is between the same events.

  - Then assert that the remaining fields of the events are equal.

- Verify data integrity: validate that silver jobs consistently process records across notebooks with the same integrity logic applied.

  - Duplicate events with an older or the same `eventDateTime` must be ignored.

  - Duplicate events with the latest `eventDateTime` must overwrite the existing record.

  - The same event differing in any one of the unique key fields must be inserted as a new record.

  - A delete event must overwrite its respective publish event with the delete flag set to true.

  - An undelete event must overwrite its respective publish event with the delete flag set to false.

  - Undelete/delete events arriving earlier than their publish events must be overwritten.

  - Events with null mandatory fields must be filtered out and not processed into silver.

- Verify data accuracy: the value in every column matches exactly the data sent to the event hub from `collection.json`.

  - Validate that the silver table stores data as per the standard data definitions specified in the contracts, such as data type, size, and format.

  - Validate that stored dates match with millisecond precision.

  - Validate that stored dates are in the common UTC format.

  - Validate that float/double values match in precision.

- Verify batch processing.

  - Verify silver processes data in batches.

  - Sending data in the same batch must cause no change in integrity and uniqueness.

  - Sending data in different batches must cause no change in integrity and uniqueness.
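To compute expected values for the duplicate-handling rules, a test can keep a small in-memory reference model and compare it with the silver table after the job run. The following is a hedged sketch only: the `SilverEvent` shape, the choice of `CorrelationId` plus `ProductId` as the unique key, and the `IntegrityModel` class are hypothetical, and delete/undelete handling is left out.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, simplified event shape used only for this sketch.
public record SilverEvent(string CorrelationId, string ProductId, DateTime EventDateTime, string Payload);

public static class IntegrityModel
{
    // Applies events in arrival order and returns the records a silver table
    // is expected to hold afterwards under the duplicate-handling rules.
    public static Dictionary<(string, string), SilverEvent> Apply(IEnumerable<SilverEvent> events)
    {
        var table = new Dictionary<(string, string), SilverEvent>();
        foreach (var e in events)
        {
            var key = (e.CorrelationId, e.ProductId);
            if (!table.TryGetValue(key, out var existing))
            {
                table[key] = e;   // differs in a unique key field: insert as a new record
            }
            else if (e.EventDateTime > existing.EventDateTime)
            {
                table[key] = e;   // duplicate with a later eventDateTime: overwrite
            }
            // Duplicate with an older or the same eventDateTime: ignore.
        }
        return table;
    }
}
```

Because the model depends only on arrival order and timestamps, the same expected result applies whether the events are sent in one batch or split across several.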

Phase III – Reporting

This is the final stage of system testing. Reporting is exposed through a set of APIs: requests are placed from the UI portal, which fetches data from Databricks through a secure API. The key focus of this phase is data security and timeliness, that is, how the data stored in the Databricks tables is retrieved and made available for reporting.

Test coverage and automation strategy –

- Verify data security: as security is the key focus of this phase, automated tests validate the key security measures imposed on data and infrastructure.

  - Validate that data is accessible only through a specific cluster, which is accessible through the secure API.

  - Validate that clusters are not accessible when the user has not been granted permission.

  - Validate that data is not accessible from tables without proper grants given through ACLs.

  - Validate that PII data accessed through internal clusters is hashed and redacted.

  - Validate GDPR (General Data Protection Regulation) data storage compliance, that is, that data is stored in the same region. For instance, data is stored in the WUS (Western United States) Databricks and accessed from the WUS secure API only.

- Verify data consistency:

  - Validate that data across the aggregate tables and the silver tables is consistent.

  - Validate that data stored in Databricks matches what is returned from the APIs.

- Verify data validity:

  - Validate that the API response is as expected for the type and number of events ingested.

  - Validate that the data returned follows the time zone of the request.

    - For instance, API requests from the Australia/NSW time zone must fetch data according to that time zone. If an event was triggered on 1st Jan 2021 at 9 AM AEST and an API request is placed from Australia, the response must show that the event occurred on 1st Jan 2021. When the request is placed from America/New York, the response must group the event under 31st Dec 2020.

- Verify data timeliness:

  - Validate data availability for reporting.

- Verify query performance:

  - Validate that API requests can fetch data from Databricks within the set timeouts.

  - Validate the average query response times against the baselines.
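The time zone example from the data validity checks can be reproduced with a few lines of date arithmetic, which is handy for computing the expected grouping date in a test. This is an illustrative sketch: `ReportingDates` and `LocalDate` are hypothetical names, and the IANA IDs `Australia/Sydney` and `America/New_York` are assumed to resolve on the host (true on Linux and macOS, and on Windows with .NET 6 or later using ICU).

```csharp
using System;

public static class ReportingDates
{
    // Converts an event instant into the calendar date seen in the requested time zone.
    public static DateTime LocalDate(DateTimeOffset eventTime, string timeZoneId)
    {
        var zone = TimeZoneInfo.FindSystemTimeZoneById(timeZoneId);
        return TimeZoneInfo.ConvertTime(eventTime, zone).Date;
    }
}

public static class ReportingDatesExample
{
    public static void Run()
    {
        // 1st Jan 2021, 9 AM AEST (UTC+10), as in the example above.
        var eventTime = new DateTimeOffset(2021, 1, 1, 9, 0, 0, TimeSpan.FromHours(10));

        var sydney = ReportingDates.LocalDate(eventTime, "Australia/Sydney");
        var newYork = ReportingDates.LocalDate(eventTime, "America/New_York");

        Console.WriteLine($"{sydney:yyyy-MM-dd} / {newYork:yyyy-MM-dd}");
    }
}
```

The same instant lands on 1st Jan 2021 for the Australian request and on 31st Dec 2020 for the New York request, which is the grouping the API response is expected to return.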

Challenges of Databricks testing

- There’s no specific tool supporting Databricks testing out of the box.

  - All the tests and framework components are coded in C# using the NUnit NuGet package.

  - Databricks jobs are handled through Databricks APIs using Newtonsoft JSON.

  - Azure storage containers are handled using the `Microsoft.WindowsAzure.Storage` NuGet library.

  - Authorization and tokens are generated using the `Microsoft.Identity.Client` NuGet library.

- Commonly used test environments are not ideal for running automated tests, as other running jobs might hinder the automated runs.

  - Use a dedicated Databricks environment and infrastructure for automated tests.

- A dedicated Databricks environment with a limited collection file that is reused in every run does not simulate real-time scenarios.

  - Randomize data for the key fields in the payload and attachments in `collection.json` to simulate dynamic data, which addresses the issue of simulating real-time events to some extent.

  - Generate unique GUIDs for `correlationId`, `uniqueId`, and `blobURls` for every run.

  - Generate dynamic DateTime values for `eventDateTime`, `statusDateTime`, `startDateTime`, and `endDateTime`.

  - Write dynamic blob files to a specified path in the ingestion blob storage accounts, using a `templateblob.json` as an attachment.

- Complex Databricks validations

  - Validate events with no attachments as well as events with attachments.

  - Validate time zone tests. APIs must return responses according to the time zone of the request placed.

  - Validate struct fields from silver tables, such as Asset, Actions, and Entitlements.

  - Validate data from nested JSON.

- Tightly coupled tests having a high dependency on pre-setup

  - Isolating tests or keeping them independent is almost impossible with Databricks, since the average job run time is 10 minutes and running these jobs for each test is impractical.

    - Leverage NUnit’s `OrderAttribute` to determine the order of the prerequisite setup as well as the `TestFixture`.

  - Pushing data in batches and then running the jobs seems a suitable approach. However, the tests are tightly coupled in the sense that isolating and running a particular test does not work. For instance, a specific silver test cannot be run in isolation, as silver needs the ingestion and bronze setup as prerequisites.

- One round of test execution takes 2 hours.

  - Test setup time is high: each Databricks job takes 10 minutes on average, even with sizeable performance clusters, so this time cannot be reduced.

  - Tests from the same fixtures are asynchronous and quick, though.

- Testing multiple regions, since NAS covers 5 different regions: WUS, NEU, AUS, JAE, and CAC

  - The automation system can run in a specified region by reading predefined secrets from the pipeline.
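The randomization of key fields mentioned in the challenges above could look roughly like the sketch below. It is an assumption-laden illustration rather than the framework's implementation: it uses `System.Text.Json.Nodes` (.NET 6 or later) instead of Newtonsoft JSON to stay dependency-free, it assumes the identifiers and timestamps are top-level string properties of each event, and `EventRandomizer` is a hypothetical name.

```csharp
using System;
using System.Text.Json.Nodes;

public static class EventRandomizer
{
    // Returns a copy of the template event with fresh identifiers and timestamps,
    // leaving every other field (such as eventType) untouched.
    public static JsonObject Randomize(string templateJson, DateTime utcNow)
    {
        var node = JsonNode.Parse(templateJson)
                   ?? throw new ArgumentException("Template is empty.", nameof(templateJson));
        var evt = node.AsObject();

        // Unique per run, so repeated runs do not collide on the unique keys.
        evt["correlationId"] = Guid.NewGuid().ToString();
        evt["uniqueId"] = Guid.NewGuid().ToString();

        // ISO 8601 round-trip format keeps sub-second precision.
        evt["startDateTime"] = utcNow.AddMinutes(-5).ToString("o");
        evt["endDateTime"] = utcNow.ToString("o");
        evt["eventDateTime"] = utcNow.ToString("o");
        return evt;
    }
}
```

Passing the clock in as `utcNow` keeps the generated timestamps known to the test, so the same values can later be used as the expected side of the assertions.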

In short, even though there is no out-of-the-box tool support for Databricks testing, a majority of Databricks testing can be automated using native C# code and a few NuGet libraries.
