Major technological disruptions have often met with skepticism and apprehension when first introduced. When automobiles appeared in the early 1900s, they were openly criticized and even ridiculed. Online and digital banking was considered flawed because of concerns over security and privacy. Even when cloud computing was first introduced, many people had misgivings about its security, reliability, and privacy.

Today, however, most organizations have adopted cloud technology, with nearly six out of ten businesses having moved their workloads to the cloud by 2022. With the introduction of ChatGPT and other tools powered by generative AI, similar fears have resurfaced. These fears have not only made enterprises wary of security and privacy risks but have also necessitated proactive government regulation.

In a recent collaboration with consultants from Zinnov, we explored the following themes related to generative AI.

Concerns associated with generative AI

- Privacy and security: Generative AI enables the creation of fake news and impersonation, making social engineering cyber-attacks more effective. It also raises doubts about the authenticity of photographic evidence. These privacy and security concerns are legitimate, as shown by Italy’s temporary ban on ChatGPT over data collection worries. Organizations also risk leaking sensitive information, as happened when Samsung employees entered confidential company data into ChatGPT.

- Reliability and control: Managing information becomes more challenging when its source and provenance are unknown. Generative AI isn’t perfect: because its models are trained on datasets created by humans, their outputs are prone to reflecting human biases. The unreliability of ChatGPT and other generative AI-based tools can also introduce biases into the learning process, which is a primary reason the education sector in particular opposes their use.

- Intellectual property: Generative AI raises several intellectual property concerns. Training on copyrighted material can lead to copyright infringement and plagiarism claims, as well as disputes over works protected by IP laws such as patents or trade secrets. Using generative AI to create designs and other creative works also raises questions of ownership and copyright. The music industry offers a fascinating case study: Google’s MusicLM, trained on an extensive dataset of 280,000 hours of music, can generate music solely from textual descriptions. However, Google initially refrained from releasing the model, primarily because of copyright concerns raised by the music industry.

- Sustainability: Running complex computations consumes significant energy. For example, training GPT-3 is estimated to have used about 1.287 gigawatt-hours of electricity, roughly equivalent to the annual energy consumption of 120 U.S. homes.

- Job losses: People across the globe are worried about losing their jobs to generative AI. Goldman Sachs estimates that the latest wave of artificial intelligence could expose the equivalent of roughly 300 million full-time jobs worldwide to automation.

- Cost: Generative AI can be expensive, because training and running complex models requires powerful computing systems and resources.
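The GPT-3 comparison in the sustainability point can be checked with simple arithmetic, using only the two figures given there (about 1.287 GWh of training energy and 120 homes). Dividing one by the other gives the implied annual electricity use per household:

$$
\frac{1.287\ \text{GWh}}{120\ \text{homes}} = \frac{1{,}287{,}000\ \text{kWh}}{120\ \text{homes}} \approx 10{,}725\ \text{kWh per home per year}
$$

This is in line with the typical average for a U.S. household of roughly 10,000 to 11,000 kWh per year, so the comparison holds up as an order-of-magnitude illustration rather than a precise measurement.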

Best practices for generative AI deployment

It is important to approach generative AI with a balanced perspective, recognizing its potential benefits while addressing any legitimate concerns. Responsible development, ethical guidelines, and ongoing research are crucial to ensure that generative AI can create social value as well as economic wealth.

- Deploying proactive security measures: Phishing attacks can compromise sensitive information, particularly when attackers use ChatGPT to craft phishing messages, iterating on its responses to tailor them to a specific target persona. To combat this, email security providers like SlashNext are fighting AI with AI, using their generative AI for business email compromise (BEC) to identify and block scam messages generated by ChatGPT. NVIDIA Morpheus is another solution that defends users against spear phishing by combining generative AI with its own cybersecurity framework. Regular internal cybersecurity validations will also be important for maintaining a strong privacy and security posture.

- Verifying content accuracy: Biases in LLMs are inevitable, but tuning instructions and regularly auditing and monitoring model performance can address concerns around reliability and control. Several solution providers are developing products to evaluate LLM biases related to gender, sexual orientation, race, age, nationality, religion, and political views.

- Undertaking green initiatives: Training AI systems is resource-intensive and demands significant amounts of energy. Implementing energy-saving technologies such as liquid cooling and renewable energy sources in data centers, developing “green AI” (eco-friendly, energy-efficient AI technology), or simply using smaller models could increase green AI savings by up to 115%.

- Investing in effective reskilling: History shows that technological innovations initially displace workers but lead to employment growth in the long term. While some job roles may become obsolete, new ones will emerge, such as AI engineering and transformer learning. Technological progress will foster the creation of high-value jobs, driving innovation and economic growth. To protect jobs, organizations will need to consider how and when to invest in employee reskilling programs that support changing work priorities.

- Optimizing expenses: Leveraging pre-trained models instead of building models from scratch, and applying techniques such as transfer learning, can save time and computational resources. By using pre-trained model families like GPT and BERT, which offer a range of options, organizations can reduce the overall cost of development and deployment and apply these models strategically across various applications.

Figure 1: Best practices for generative AI

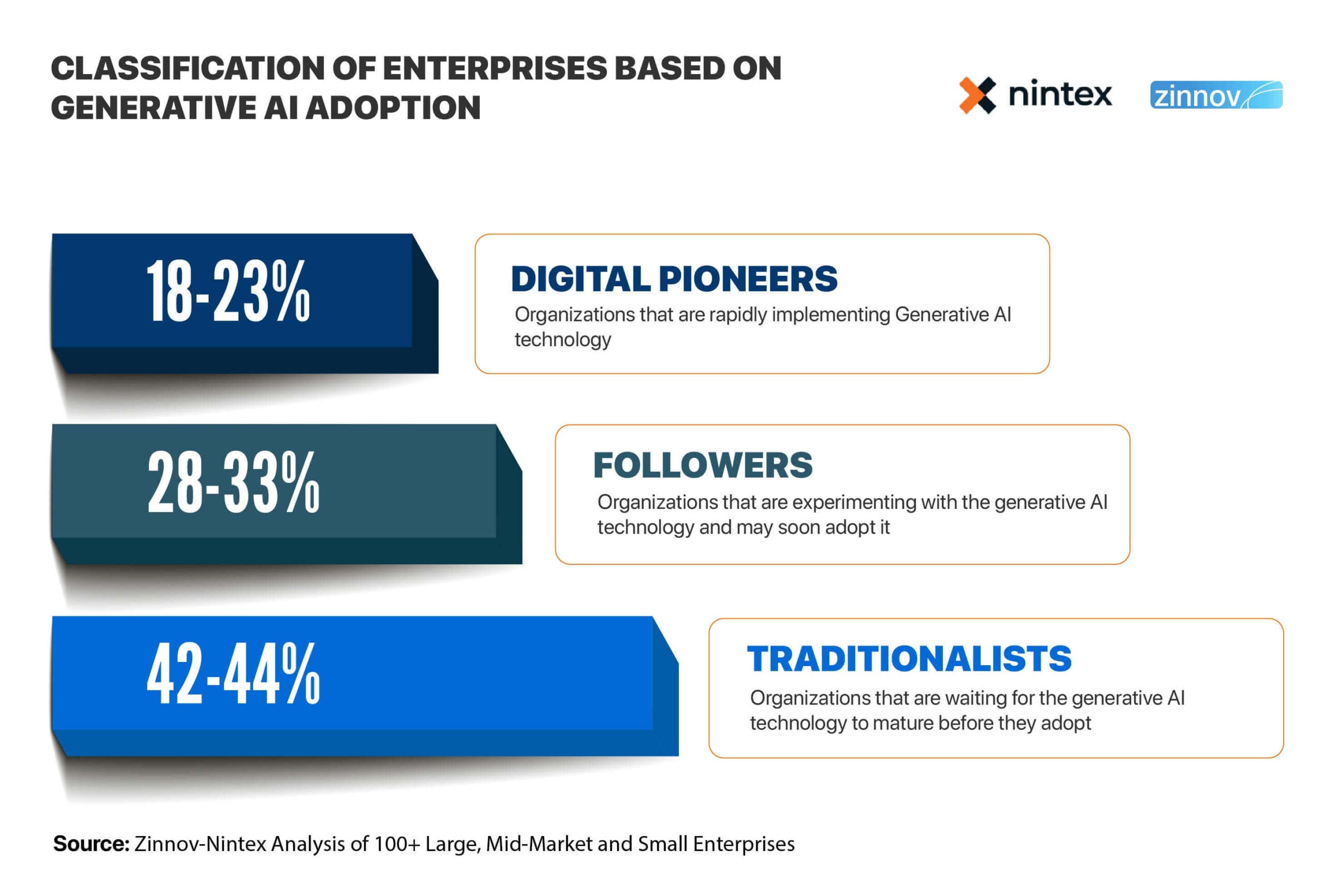

Despite some valid concerns and apprehensions regarding generative AI, many organizations are rapidly exploring and experimenting with its various applications.

Figure 2: Classification of enterprises based on generative AI adoption

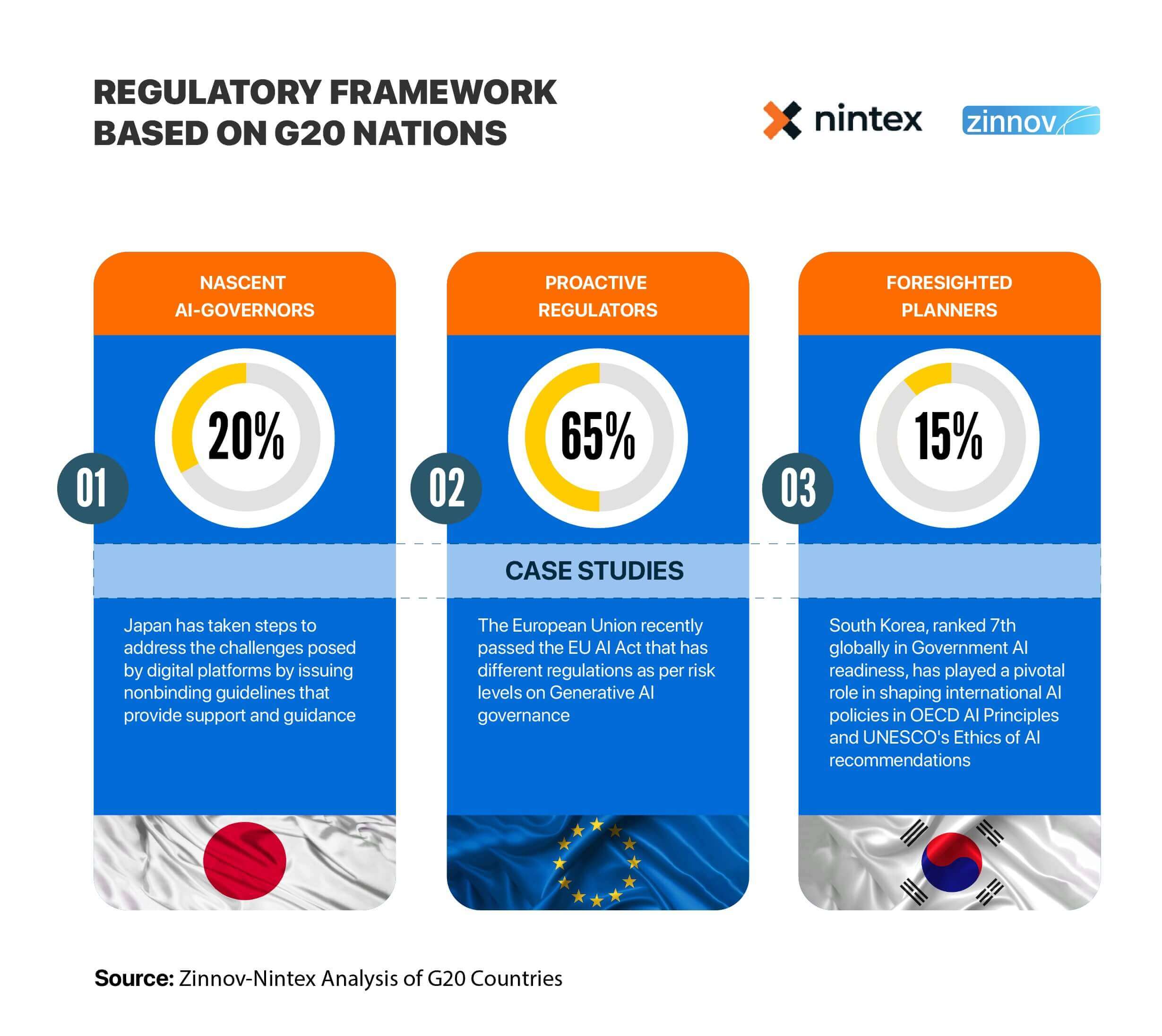

As the use of generative AI becomes ubiquitous, several countries are exploring ways to regulate it. The United Kingdom has announced plans to regulate AI, while the European Union has proposed the European AI Act to establish a regulatory and legal framework for AI in Europe. G20 governments are taking positions on generative AI, and most fall into one of the following three camps:

Nascent AI governors: Governments that haven’t passed any AI legislation or initiated regulatory or drafting processes. Countries like South Africa and Japan are in the early stages of pursuing regulatory reforms that allow AI to be used for positive social impact while achieving regulatory objectives.

Proactive regulators: Governments that have implemented regulations and laws surrounding AI use and its privacy concerns. Countries like Brazil, China, and Canada have already set regulations in place for AI to ensure positive social impact and streamline its usage.

Foresighted planners: Governments that, in addition to enacting legislation, have taken proactive measures such as training personnel and establishing centres of excellence to address anticipated problems and drive future adoption of the technology. Countries like South Korea and Australia have taken steps toward future advancements in the technology.

Figure 3: Regulatory framework based on G20 Nations

What the future holds for generative AI adoption

Generative AI is a rapidly evolving technology, and its risk and opportunity landscape is poised to change quickly. While its potential is considerable, it is important to be mindful of the risks and responsibilities that come with it.

In the future, we can expect AI models to become increasingly powerful and sophisticated. Recent advancements are already producing better safeguards, such as GPT-4’s ability to reject illegal requests that fall under categories defined in OpenAI’s policy guidelines.

Moving forward, organizations must balance responsible deployment of this technology with continuous innovation for growth. With a pragmatic approach, generative AI can usher in a new era of efficiency and creativity for businesses. However, reckless implementation risks unintended harm.

The prudent path forward is one of cautious optimism, ethical development, and proactive governance. Businesses should audit generative AI usage, provide transparency, and ensure security of applications. Policymakers need forward-thinking regulations to encourage innovation while protecting society’s interests. Employees must receive support through reskilling and job transition programs.

If stewarded responsibly, generative AI can augment human potential and boost progress. The promise outweighs the perils if we collectively choose responsibility over recklessness, creativity over chaos, and progress over peril. The future is unwritten, and our choices today will shape the generative AI landscape for decades to come.

Written in collaboration with consultants at Zinnov, namely Nischay Mittal, Prankur Sharma, Ridhi Kalra Madan, Aayra Angrish and Pooja Ghate.